Risk Analysis in AI Translations: From Zero Errors to Fit-for-Purpose Quality

For decades, translation quality was judged by a single standard: error-free output. But in today’s AI-driven world — and with new frameworks like the EU AI Act reinforcing the need for transparency and risk management — that definition no longer fits. Businesses are dealing with a flood of multilingual content at unprecedented speed, and the question has shifted from “Is this perfect?” to “Is this good enough for the purpose it serves?”

This change doesn’t mean lowering standards — it means aligning them with the risk, purpose and audience of each text. A set of medical device instructions demands absolute precision, while an internal memo may only need clarity and speed. The real challenge is knowing how to classify content and assign the right translation workflow. Risk analysis provides the framework to do exactly that.

Redefining Quality in the AI Era

In traditional translation, quality was often measured against a “zero error” target. While this is critical for high-stakes content, it can be unnecessarily costly and slow for lower-risk documents.

The new paradigm is fit-for-purpose quality: translation accuracy and style should match the intended use and risk exposure.

- For regulatory or safety-critical documents, a single mistranslation can have severe legal or health consequences. Here, perfection is non-negotiable.

- For technical manuals or training content, clarity and accuracy matter, but some stylistic imperfections may be acceptable if the core message is correct.

- For internal communications or early-stage creative drafts, speed and accessibility may outweigh linguistic perfection.

By matching quality expectations to risk, companies can strike the right balance between efficiency and accuracy, avoiding wasted effort while strengthening trust in essential communications.

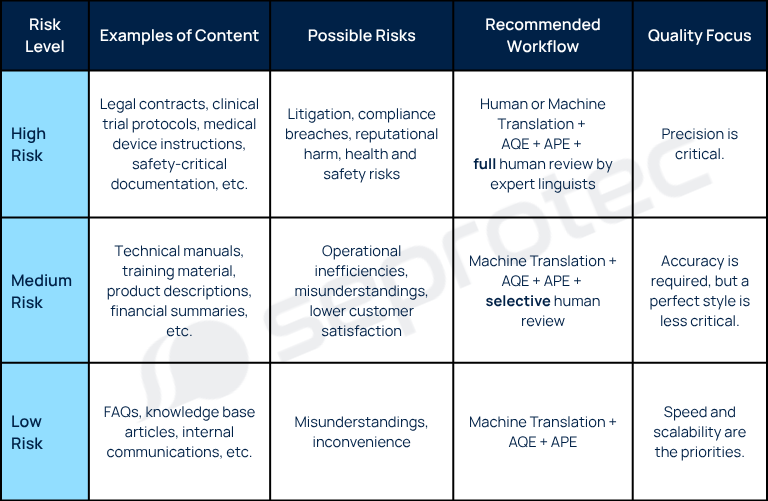

Step 1: Classify Content by Risk

The first and most critical step in risk analysis is recognizing that not all content carries the same weight. In the rush to globalize, many organizations still treat every translation project with the same level of scrutiny, but this can quickly drain time, budget, and resources.

By applying a structured classification, companies can prioritize effort and accuracy where they are truly needed while streamlining less critical content. A risk-based approach ensures that quality expectations are directly linked to the potential impact of errors.

- High-Risk Content: Legal contracts, clinical trial protocols, medical device instructions, safety-critical documentation.

  - Risks: Litigation, compliance breaches, reputational harm, health and safety risks.

- Medium-Risk Content: Technical manuals, training material, product descriptions, financial summaries.

  - Risks: Operational inefficiencies, misunderstandings, lower customer satisfaction.

- Low-Risk Content: FAQs, knowledge base articles, internal communications.

  - Risks: Minimal; errors may cause inconvenience but rarely lead to severe consequences.
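
The three tiers above can be captured as a simple lookup. The following is a minimal sketch, not a prescribed implementation: the content-type labels and the `classify` helper are hypothetical names chosen for illustration, and defaulting unknown content to the high-risk tier is an assumption (a cautious one) rather than something the classification itself dictates.

```python
from enum import Enum


class Risk(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Illustrative mapping based on the tiers listed above.
# The keys are hypothetical labels; each organization would define its own.
CONTENT_RISK = {
    "legal_contract": Risk.HIGH,
    "clinical_trial_protocol": Risk.HIGH,
    "medical_device_instructions": Risk.HIGH,
    "safety_documentation": Risk.HIGH,
    "technical_manual": Risk.MEDIUM,
    "training_material": Risk.MEDIUM,
    "product_description": Risk.MEDIUM,
    "financial_summary": Risk.MEDIUM,
    "faq": Risk.LOW,
    "knowledge_base_article": Risk.LOW,
    "internal_communication": Risk.LOW,
}


def classify(content_type: str) -> Risk:
    """Return the risk tier for a content type.

    Assumption: unknown content types fall back to HIGH so that
    unclassified material is never under-reviewed by accident.
    """
    return CONTENT_RISK.get(content_type.strip().lower(), Risk.HIGH)
```

For example, `classify("FAQ")` returns `Risk.LOW`, while an unlisted type such as `"press_release"` falls back to `Risk.HIGH` until someone assigns it a tier.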

Step 2: Align Risk with the Right Workflow

Once you know the risk level of your content, which will differ by sector and company, the next step is to assign the right workflow. This is where many organizations stumble. They either overinvest in low-risk content, slowing down productivity and inflating costs, or underinvest in high-risk material, exposing themselves to compliance or reputational disasters.

The key is to match workflow intensity to the risk profile:

- High-Risk → Human-Centric Workflow

  - Approach: Human or Machine translation combined with Automatic Quality Estimation (AQE) and targeted Automatic Post-Editing (APE), followed by full human review by expert linguists with subject-matter expertise.

  - Why it matters: Even a small error could endanger patient safety, trigger regulatory fines, or cause product recalls. Combining AI tools with expert review ensures speed and consistency without compromising on accuracy.

- Medium-Risk → Hybrid Workflow

  - Approach: Machine translation enhanced with AQE to detect risky segments, followed by targeted APE or selective human review.

  - Why it matters: Accuracy is essential, but formulations don’t always need to be polished to perfection. Focusing human effort on the sections that matter most keeps the process efficient while maintaining reliability.

- Low-Risk → AI-First Workflow

  - Approach: Secure raw machine translation supported by Automatic Quality Estimation (AQE) to flag potential issues. Automatic Post-Editing (APE) can be applied if needed, while human validation remains available for added assurance or compliance purposes.

  - Why it matters: These texts benefit most from speed and scalability. AQE ensures that obvious risks are caught, and APE can automatically correct smaller issues. This gives companies confidence that even fast-turnaround content remains clear and usable, without over-investing in human resources.
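
The mapping from risk level to workflow can be written down as a small routing table. This is a rough sketch under stated assumptions: the step names are free-text labels summarizing the approaches above, and `plan_workflow` and its `human_validation` flag are hypothetical, not part of any particular translation platform.

```python
from typing import List

# Workflow steps per risk tier, summarizing the approaches described above.
# Step names are illustrative labels, not identifiers from a real system.
WORKFLOWS = {
    "high": [
        "human or machine translation",
        "automatic quality estimation",
        "targeted automatic post-editing",
        "full expert review",
    ],
    "medium": [
        "machine translation",
        "automatic quality estimation",
        "targeted post-editing or selective human review",
    ],
    "low": [
        "raw machine translation",
        "automatic quality estimation",
    ],
}


def plan_workflow(risk: str, human_validation: bool = False) -> List[str]:
    """Return the ordered steps for a risk tier.

    For low-risk content, human validation stays optional and is only
    appended when explicitly requested (e.g. for compliance purposes).
    """
    if risk not in WORKFLOWS:
        raise ValueError(f"unknown risk tier: {risk!r}")
    steps = list(WORKFLOWS[risk])  # copy so callers cannot mutate the table
    if risk == "low" and human_validation:
        steps.append("human validation")
    return steps
```

Keeping the table as data rather than branching logic means the allocation rules can be reviewed and changed without touching the code that executes them.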

As AI becomes a core part of multilingual content workflows, transparency will also play a key role. In line with the principles of the EU AI Act, companies should consider indicating when translations are produced with the help of AI. This small step helps maintain user confidence and supports responsible AI adoption across industries.

Step 3: Measure and Monitor Quality with the Right Tools

Designing workflows is only half the battle. To make them sustainable, organizations must measure quality continuously and feed those insights back into the process. Without monitoring, even the best workflows can drift into inefficiency or produce unexpected risks.

Several tools and methods can help:

- Edit-Distance Analysis: Measures how much a human editor changes MT output. A decreasing edit distance over time suggests that the MT output is improving and needs less human correction.

- Automatic Quality Estimation (AQE): Provides real-time scoring of translation output without requiring a reference translation. It highlights “red flag” sections before they are published.

- Error Typology Tracking: Classifies errors into categories (terminology, grammar, style, accuracy). This helps teams identify recurring weaknesses in MT or post-editing processes.

- Continuous Feedback Loops: Every correction from human post-editors can be fed back into MT models, style guides or terminology databases, improving performance over time.
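
To make edit-distance analysis concrete, the sketch below computes a word-level Levenshtein distance between raw MT output and its post-edited version, normalized by the longer segment. The choice of word-level tokens, whitespace tokenization, and this particular normalization are assumptions made for illustration; production tools may use character-level distance or metrics such as TER instead.

```python
def levenshtein(a, b):
    """Edit distance between two sequences.

    Insertions, deletions, and substitutions each cost 1.
    """
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(
                min(
                    prev[j] + 1,             # delete x
                    curr[j - 1] + 1,         # insert y
                    prev[j - 1] + (x != y),  # substitute (free if equal)
                )
            )
        prev = curr
    return prev[-1]


def normalized_edit_distance(mt_output: str, post_edited: str) -> float:
    """Share of words changed by the editor, between 0.0 and 1.0."""
    mt_tokens = mt_output.split()
    pe_tokens = post_edited.split()
    longest = max(len(mt_tokens), len(pe_tokens))
    if longest == 0:
        return 0.0  # two empty segments: nothing was edited
    return levenshtein(mt_tokens, pe_tokens) / longest
```

A score of 0.0 means the editor accepted the MT output unchanged, while values close to 1.0 mean the segment was essentially rewritten. Averaging this score per engine, language pair, or content type over time gives the trend described above.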

Taken together, these methods create a closed feedback system. They help companies track progress, understand where human effort is most valuable, and ensure that quality is consistently aligned with business goals.
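
Error typology tracking can start as a plain tally of reviewer annotations. The sketch below assumes each annotation is simply one of the four category names mentioned above; the `tally_errors` helper is hypothetical, and real typologies (for example MQM-style schemes) are usually more fine-grained.

```python
from collections import Counter

# Categories named in the list above; real typologies are often richer.
CATEGORIES = ("terminology", "grammar", "style", "accuracy")


def tally_errors(annotations):
    """Count annotated errors per category.

    Every known category appears in the result, even with a count of 0,
    so reports keep the same shape from one period to the next.
    """
    counts = Counter({category: 0 for category in CATEGORIES})
    for category in annotations:
        if category not in CATEGORIES:
            raise ValueError(f"unknown error category: {category!r}")
        counts[category] += 1
    return counts
```

Calling `tally_errors(...).most_common(1)` on a batch of annotations then surfaces the most frequent weakness, which is a reasonable first candidate for a terminology update or engine retraining.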

Step 4: Build Governance and Continuous Improvement

Finally, risk analysis must be embedded in a governance framework to ensure it is applied consistently across the organization. Without governance, decisions will vary from project to project and undermine both quality and efficiency.

A strong governance model provides structure and accountability — essential in an environment where AI-driven processes are increasingly regulated. Frameworks such as the EU AI Act emphasize transparency, documentation, and proportional oversight depending on the level of risk associated with an AI system. Applying a similar mindset to multilingual workflows helps companies stay compliant while building internal trust in AI-supported translation.

Such a model should include:

- Workflow Allocation Rules: Clear policies defining which content categories fall into high, medium, or low risk and which workflows apply to each.

- Escalation Triggers: Criteria for when AI output must be escalated to full human review, for example if flagged by AQE or if specific terminology cannot be verified automatically.

- Monitoring Metrics: Benchmarks for quality, efficiency, and cost savings that are tracked and reported to management.

- Compliance Documentation: In regulated industries, keep a record of how translation quality was assured. This is invaluable during audits.

- Feedback and Improvement: Establish feedback channels between translators, reviewers, AI tools and end users. Governance should evolve as AI models improve and business needs change.
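
Escalation triggers are easiest to audit when they are explicit rules. The following sketch assumes a hypothetical AQE score between 0 and 1 (higher is better) and an illustrative threshold of 0.8; neither the scale nor the threshold comes from a specific tool, and both would need calibrating against real data.

```python
from dataclasses import dataclass, field
from typing import List

# Illustrative threshold on a hypothetical 0..1 AQE scale (higher = better).
AQE_THRESHOLD = 0.8


@dataclass
class Segment:
    text: str
    aqe_score: float
    # Terms that could not be matched against the terminology database.
    unverified_terms: List[str] = field(default_factory=list)


def escalation_reasons(
    segment: Segment, threshold: float = AQE_THRESHOLD
) -> List[str]:
    """Return why a segment needs full human review (empty list if it does not)."""
    reasons = []
    if segment.aqe_score < threshold:
        reasons.append(
            f"AQE score {segment.aqe_score:.2f} below threshold {threshold:.2f}"
        )
    if segment.unverified_terms:
        reasons.append(
            "unverified terminology: " + ", ".join(segment.unverified_terms)
        )
    return reasons
```

Returning the reasons rather than a bare yes/no gives reviewers context and leaves a record that can be stored as part of the compliance documentation.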

In conclusion, the way organizations define translation quality is changing. Instead of treating every piece of content as equally critical, leading companies are beginning to weigh accuracy requirements against the risks of getting it wrong. This shift reflects the direction of emerging frameworks such as the EU AI Act, which emphasizes transparency, accountability, and risk classification for AI systems. At Seprotec, we help organizations put this into practice. With more than 25 years of expertise in the language industry and our secure AI platform, seprotec.ai, we deliver tailored, future-proof solutions that combine human expertise with advanced AI technologies. This ensures that every piece of content, from regulatory submissions to customer FAQs, reaches the right quality level for its purpose and is backed by ISO 27001 information security protocols.
